At Scalytics, we’ve always believed that the next frontier of AI lies in open collaboration, transparent infrastructure, and developer-first tooling. Today, we’re thrilled to unveil Scalytics Connect – Community Edition, our full enterprise-grade inference platform released under the Apache 2.0 license. What was once a turnkey, self-hosted engine behind Fortune-500 deployments is now freely available for you to clone, customize, and extend as your own private AI operation stack.

Why Community Matters

Enterprises demand high throughput, sub-second latency, and ironclad security when running large language and vision models. Yet developers crave the freedom to innovate—spin up new models, craft custom agents, and integrate AI directly into the tools they use every day. Community Edition bridges that gap:

- Zero Vendor Lock-In: Own your hardware, your data, and your AI roadmap.

- OpenAI-Compatible API: Drop-in support for

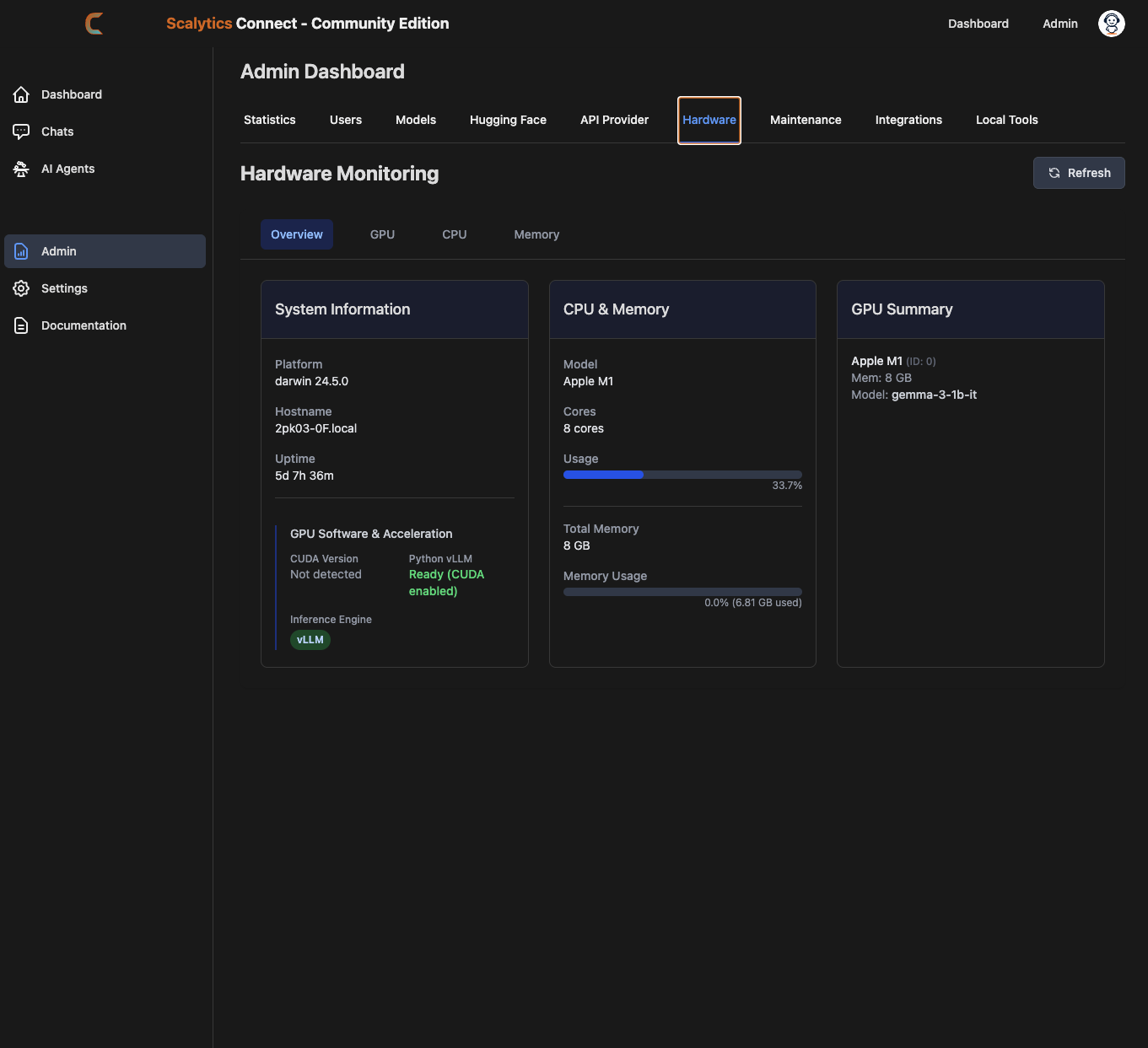

/v1/chat/completionsand/v1/images/generationslets you map existing tools—VS Code plugins, Python scripts, or webhooks—straight to your self-hosted endpoint. - Modular Architecture: vLLM-driven inference, vector services, Live Search SSE streams, and GPU monitoring live in separate, easy-to-extend services.
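To make the drop-in idea concrete, here is a minimal Python sketch that builds an OpenAI-style chat request against a self-hosted endpoint. The base URL, port, API key, model name, and Bearer-token header are placeholders and assumptions for illustration, not values taken from the Scalytics docs; only the /v1/chat/completions path comes from the text above.

```python
import json
import urllib.request

# Assumption: replace with the host and port of your own deployment.
BASE_URL = "http://localhost:3000"
# Assumption: an API key created in the Admin UI, sent as a Bearer token.
API_KEY = "sk-example"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL, api_key=API_KEY):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # hypothetical model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )


def send(request):
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Say hello in one sentence.")
    print(req.full_url)
```

Calling send(req) performs the actual HTTP call once a server is running. Tools that already speak the OpenAI format should, in principle, only need their base URL and key changed.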

By open-sourcing our entire stack, we’re inviting you to contribute new backends, refine rate-limiting strategies, or prototype novel modalities—audio, video, you name it.

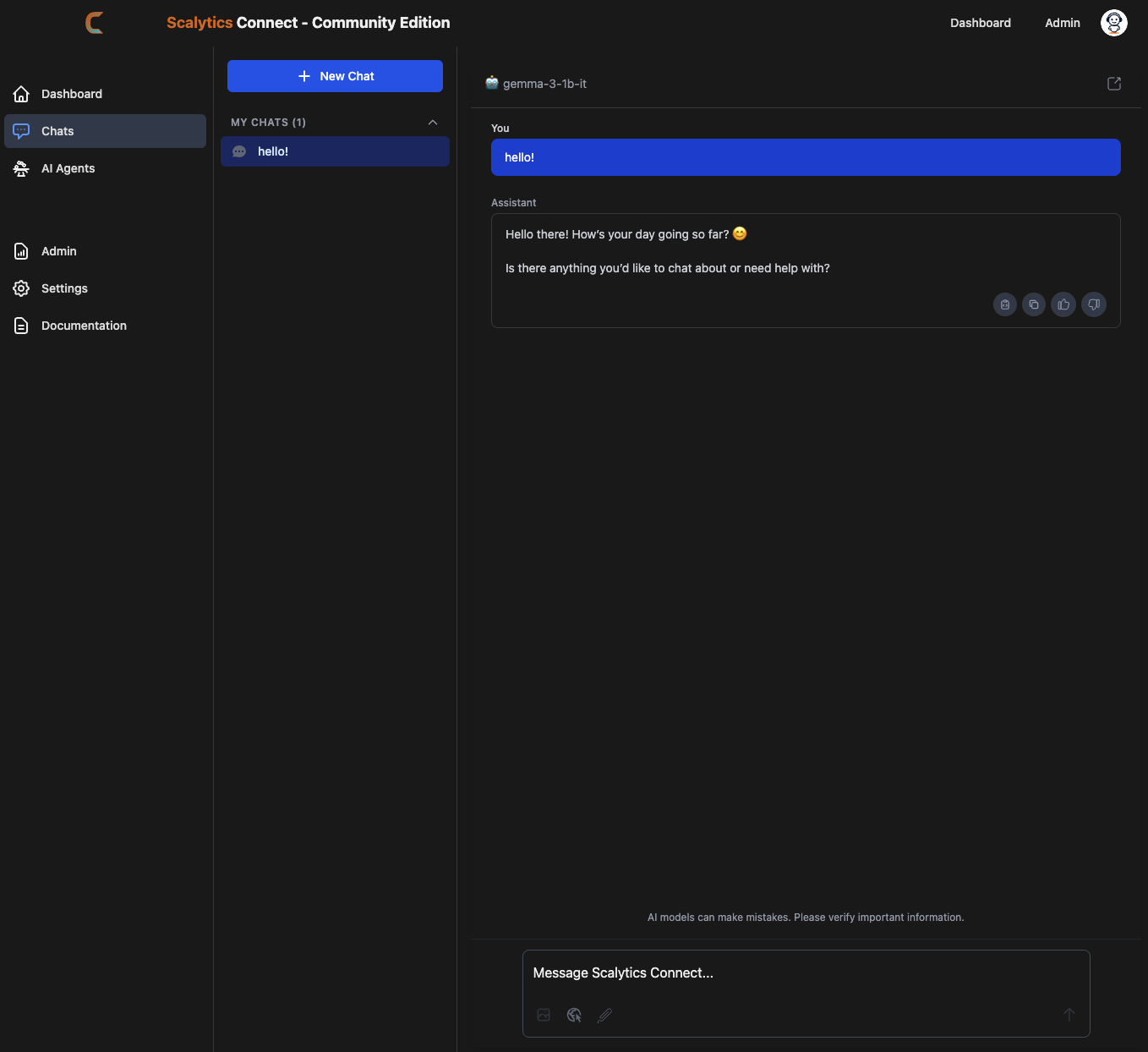

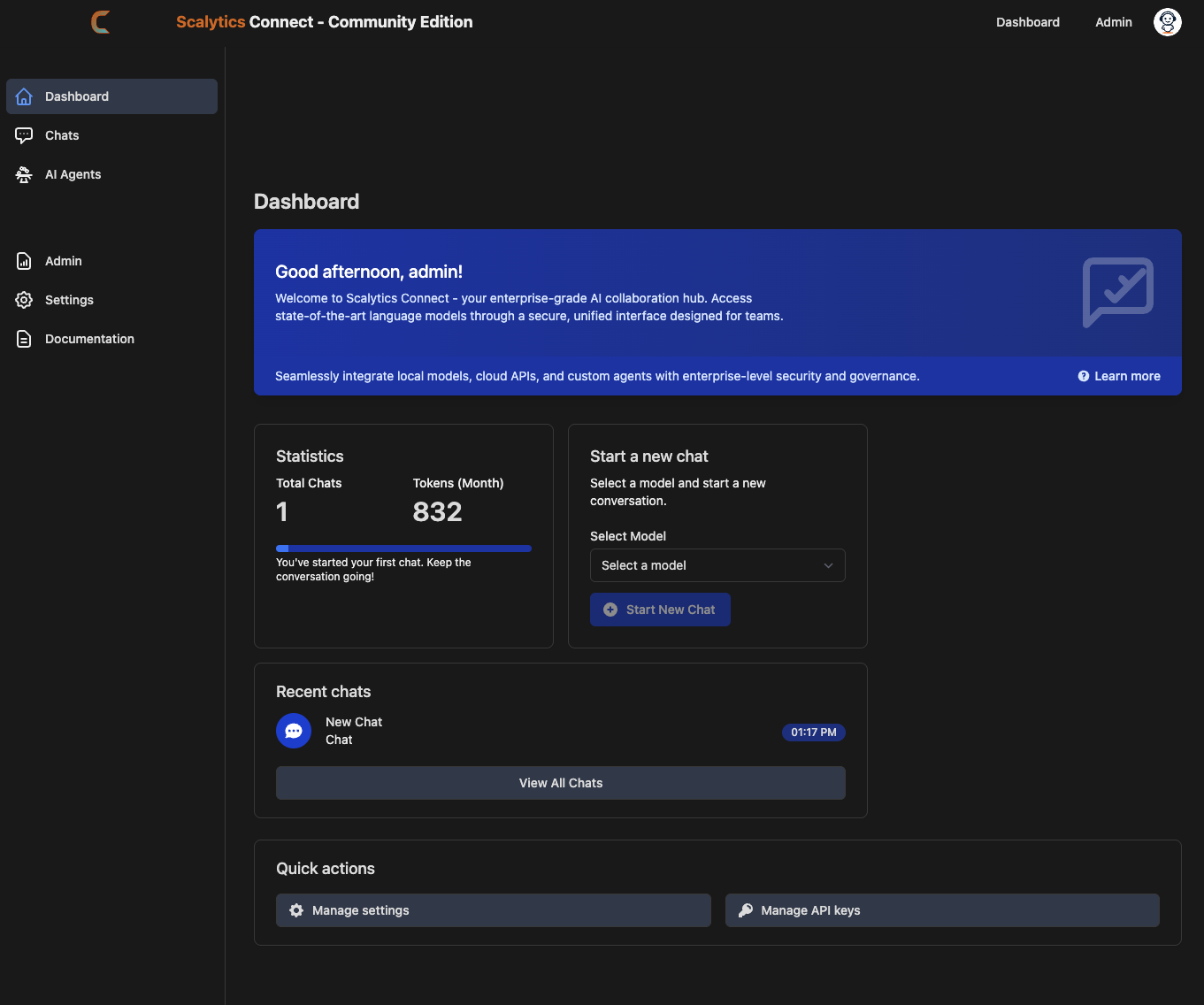

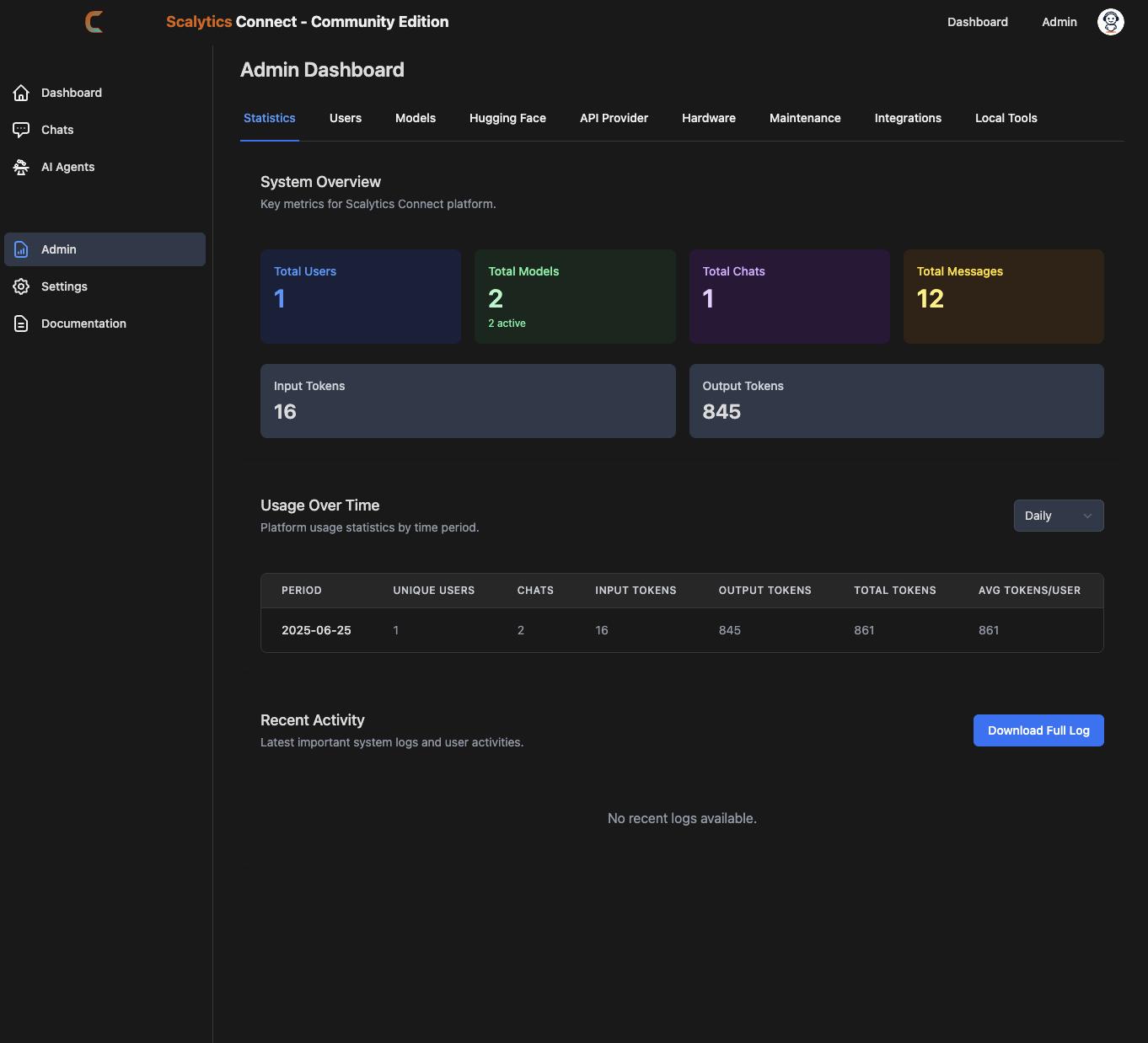

What does it look like?

What’s Inside the Repo

When you clone github.com/scalytics/Scalytics-Community-Edition, you’ll find:

- Admin UI & Orchestration Scripts: Spin up the control plane in minutes with ./start-app.sh. Manage API keys, toggle rate limits, and assign your preferred local model as “Active.”

- OpenAI-Compatible Endpoints: All chat and image-generation APIs mirror the OpenAI request/response format. Whether you’re streaming token by token or generating base64-encoded images, the integration is seamless.

- Deep Search & Vector Service: Orchestrate multi-step research workflows via /v1/deepsearch, leverage embeddings at /v1/vector/embeddings, and build secure, precise knowledge-graph applications.

- vLLM Inference Engine: Our high-throughput, memory-efficient serving layer dynamically adapts to each model’s full context window, ensuring you never hit silent truncation or context-size errors.

- Comprehensive Docs & Samples: From integrating with IDE extensions like Cline to powering custom chatbots, our /docs folder teaches you best practices for prompt design, temperature tuning, and iterative refinement.
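Since the endpoints are described as mirroring the OpenAI format, a client can handle token-by-token streams and base64-encoded images the same way it would for OpenAI. The sketch below assumes the usual OpenAI conventions (streamed "data: {...}" lines ending in "data: [DONE]", and image responses carrying a "b64_json" field); the function names are hypothetical and the sample payloads are made up for illustration.

```python
import base64
import json


def iter_stream_text(lines):
    """Yield text fragments from OpenAI-style streaming lines ("data: {...}")."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # OpenAI-style end-of-stream sentinel
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


def decode_first_image(response_body):
    """Return the raw bytes of the first image in an images response."""
    return base64.b64decode(response_body["data"][0]["b64_json"])


if __name__ == "__main__":
    sample_stream = [
        'data: {"choices": [{"delta": {"role": "assistant"}}]}',
        "",
        'data: {"choices": [{"delta": {"content": "Hel"}}]}',
        'data: {"choices": [{"delta": {"content": "lo"}}]}',
        "data: [DONE]",
    ]
    print("".join(iter_stream_text(sample_stream)))  # prints "Hello"

    sample_image = {"data": [{"b64_json": base64.b64encode(b"fake-bytes").decode("ascii")}]}
    print(decode_first_image(sample_image))  # prints b'fake-bytes'
```

In a real client, the lines argument would be the decoded lines of the HTTP response body, and the decoded image bytes would typically be written to a file.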

Quickstart in Three Commands

Ready to run? Clone the official repo from https://github.com/scalytics/Scalytics-Community-Edition.git, change into the Scalytics-Community-Edition directory, and run ./start-app.sh. See our README for further configuration and how-tos.

Join the Community

Scalytics Connect – Community Edition isn't just code; it's a community. Fork the repository, raise issues, share custom model bundles, or open pull requests to improve our vector indexer, add new SSE events, or integrate new LLM backends. We combine the stability of enterprise software with the flexibility of open source, so developers can build private, scalable AI on their own terms and under their own control.

About Scalytics

Our founding team created Apache Wayang (now an Apache Top-Level Project), the federated execution framework that orchestrates Spark, Flink, and TensorFlow where data lives and reduces ETL movement overhead.

We also invented and actively maintain KafScale (S3-Kafka-streaming platform), a Kafka-compatible, stateless data and large object streaming system designed for Kubernetes and object storage backends. Elastic compute. No broker babysitting. No lock-in.

Our mission: Data stays in place. Compute comes to you. From data lakehouses to private AI deployment and distributed ML, everything is designed for security, compliance, and production resilience.

Questions? Join our open Slack community or schedule a consult.