Why Traditional Business Intelligence Falls Short

Many companies still rely on data warehouses or data lakes designed for batch reporting rather than real time analysis. Historical reporting remains important, but the underlying architecture delays insight. Data is collected, transformed and processed in batches, often hours or days after creation, even when event streaming is used inside ETL workloads.

Change data capture (CDC) often creates the illusion of event driven behavior, while the actual system still operates in delayed cycles.

This raises two simple questions.

Can these systems perform real time risk detection or anomaly detection in daily operations?

Can they support dynamic process optimization or adapt to changing conditions?

As long as the architecture is built around batch cycles, the answer is no.

This is where Streaming Intelligence becomes essential. It links business relevant events with operational IT signals in real time without detouring through a warehouse.

What Is Streaming Intelligence? (Real-Time Analytics Explained)

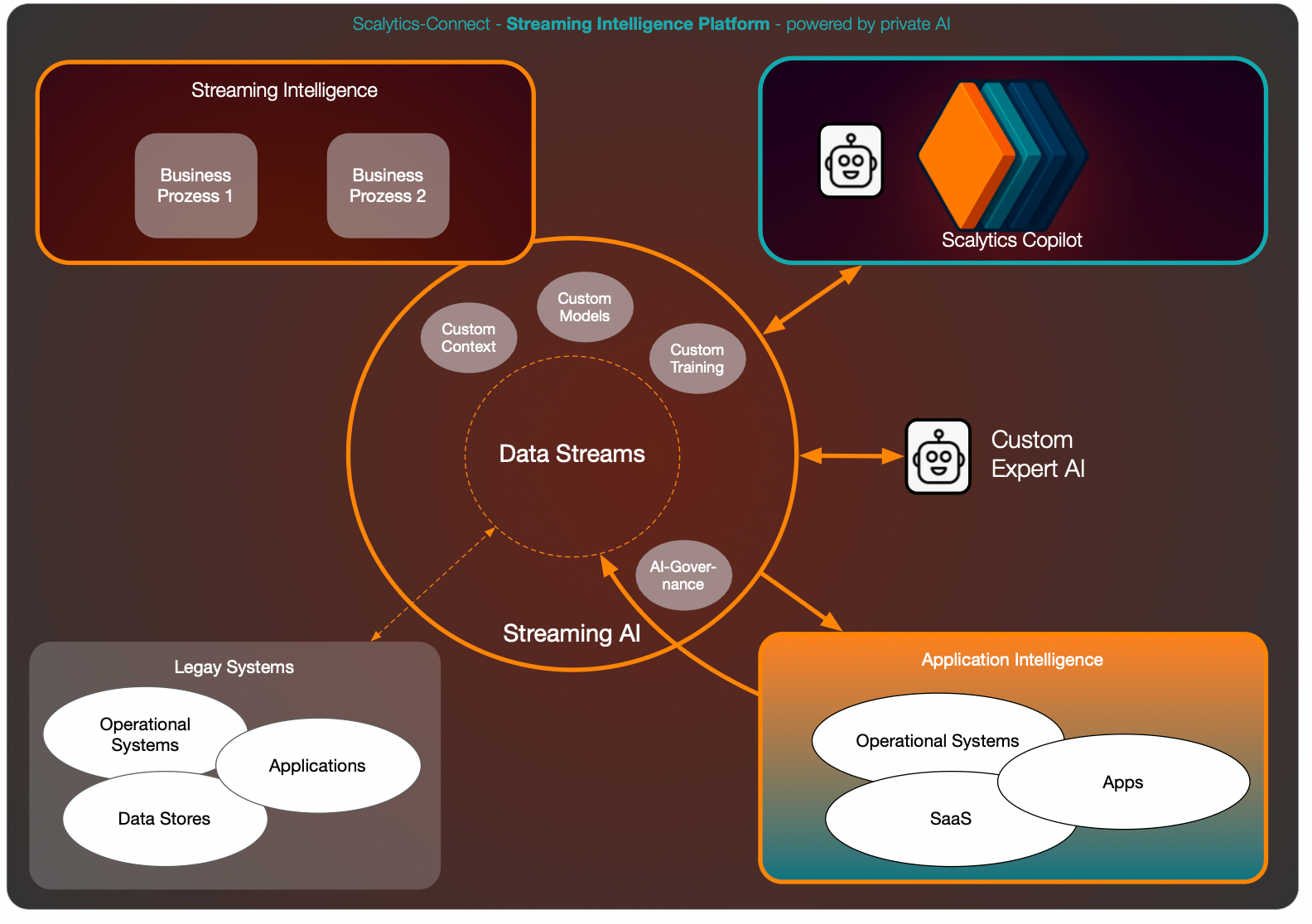

Streaming Intelligence enables analytics and decisions as events occur. It processes event and data streams continuously and derives insights, actions and alerts on the fly.

The essential capability is the unification of business events and operational events in real time.

Instead of storing data and analyzing it later, stream processors such as Apache Flink operate directly on live streams to produce actionable outputs immediately.

Examples include:

- Autoscaling workloads based on real time demand

- Quality control on live data

- Risk scoring and anomaly detection as events occur

This closes the latency gap between operations and decision making.
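As a rough illustration of scoring events as they occur, the sketch below flags values that deviate strongly from a sliding window of recent observations. It is a minimal, standard-library example and not how Flink or any specific platform implements anomaly detection; the class name `RollingAnomalyDetector` and its parameters (`window_size`, `threshold`, `min_samples`) are assumptions made for this example.

```python
from collections import deque
from math import sqrt


class RollingAnomalyDetector:
    """Hypothetical helper: flags values far from the recent sliding-window mean."""

    def __init__(self, window_size=50, threshold=3.0, min_samples=5):
        self.window = deque(maxlen=window_size)  # most recent values only
        self.threshold = threshold               # allowed deviation in standard deviations
        self.min_samples = min_samples           # do not score until this many values are seen

    def observe(self, value):
        """Score one event against the current window, then add it to the window."""
        is_anomaly = False
        if len(self.window) >= self.min_samples:
            mean = sum(self.window) / len(self.window)
            variance = sum((x - mean) ** 2 for x in self.window) / len(self.window)
            std = sqrt(variance)
            if std > 0:
                is_anomaly = abs(value - mean) / std > self.threshold
            else:
                # All recent values are identical: any different value stands out.
                is_anomaly = value != mean
        # Note: the value joins the window even when flagged, a simplification.
        self.window.append(value)
        return is_anomaly


detector = RollingAnomalyDetector(window_size=10, threshold=3.0, min_samples=5)
flags = [detector.observe(v) for v in [10, 11, 9, 10, 12, 10, 11, 100]]
print(flags)  # only the last value (100) is flagged
```

Each event is scored the moment it arrives, against state held in memory, so no warehouse round trip is needed before an alert can be raised.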

Beyond ERP Data: Enabling Streaming Metadata for Adaptive Systems

The real advancement comes from utilizing dynamic streaming metadata: throughput, latency, error rates, inter event time distributions and temporal patterns.

Historically this information has been ignored or used only in niche applications.
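To make the idea of streaming metadata concrete, the following sketch derives throughput, error rate and mean inter event time from a sliding time window. It is an illustrative assumption, not a Scalytics API: the class `StreamMetadata`, its methods and the dictionary keys are invented for this example, and events are assumed to arrive in timestamp order.

```python
from collections import deque


class StreamMetadata:
    """Hypothetical tracker for metadata about a stream, not its payloads."""

    def __init__(self, window_seconds=60.0):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, is_error) pairs inside the window

    def record(self, timestamp, is_error=False):
        """Register one event and evict events that fell out of the window."""
        self.events.append((timestamp, is_error))
        cutoff = timestamp - self.window_seconds
        while self.events and self.events[0][0] <= cutoff:
            self.events.popleft()

    def snapshot(self):
        """Return the current metadata view of the stream."""
        n = len(self.events)
        if n == 0:
            return {"throughput_per_s": 0.0, "error_rate": 0.0, "mean_inter_event_s": None}
        timestamps = [t for t, _ in self.events]
        gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
        errors = sum(1 for _, is_error in self.events if is_error)
        return {
            "throughput_per_s": n / self.window_seconds,
            "error_rate": errors / n,
            "mean_inter_event_s": sum(gaps) / len(gaps) if gaps else None,
        }


meta = StreamMetadata(window_seconds=10.0)
for ts, failed in [(0.0, False), (2.0, True), (4.0, False), (6.0, False)]:
    meta.record(ts, failed)
print(meta.snapshot())
```

A production system would likely keep full distributions (for example histograms of inter event times) rather than a single mean, but the principle is the same: the metadata is computed continuously alongside the data.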

With Streaming Intelligence, systems adapt automatically. They can:

- Train machine learning models on in flight data

- Adjust parameters during processing

- Build a live, evolving view of streams, not only schema or lineage

This creates a feedback loop between business logic and infrastructure.

Systems learn and react to conditions without manual restarts or downtime.

Human oversight remains essential for critical decisions, but the system takes care of the dynamics.
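One way to picture such a feedback loop is a small controller that tunes a processing parameter from observed latency while the pipeline keeps running. The sketch below is an assumption for illustration only: the parameter (a batch size), the class `AdaptiveBatchSize` and the additive-increase, multiplicative-decrease rule are choices made for this example, not a description of any specific product.

```python
class AdaptiveBatchSize:
    """Hypothetical controller: grows the batch slowly, shrinks it quickly."""

    def __init__(self, target_latency_ms, initial=100, minimum=10, maximum=1000, step=10):
        self.target_latency_ms = target_latency_ms
        self.size = initial
        self.minimum = minimum
        self.maximum = maximum
        self.step = step

    def update(self, observed_latency_ms):
        """Adjust the batch size from the latest latency measurement."""
        if observed_latency_ms > self.target_latency_ms:
            # Too slow: halve the batch size, but never go below the minimum.
            self.size = max(self.minimum, self.size // 2)
        else:
            # Within target: increase gradually, but never exceed the maximum.
            self.size = min(self.maximum, self.size + self.step)
        return self.size


controller = AdaptiveBatchSize(target_latency_ms=200, initial=100)
for latency in [150, 150, 400, 500]:
    print(latency, "->", controller.update(latency))
# 150 -> 110, 150 -> 120, 400 -> 60, 500 -> 30
```

The hard limits (`minimum`, `maximum`) are where human oversight fits in: operators set the safe range, and the controller moves within it.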

Shift Left for ERP and Operational Databases with Streaming First Intelligence

ERP systems are traditionally slow to evolve.

Streaming Intelligence brings a Shift Left approach into this domain, one of the most expensive parts of enterprise IT.

Instead of long ERP modification cycles, Streaming Intelligence offers a complementary and faster alternative.

It enables:

- Insights generated directly in the stream and visible to business stakeholders

- Real time detection of bottlenecks with clear connection to business context

- KPIs, ROI metrics and risk indicators available without new warehouse projects

New metrics can be deployed in hours, not weeks. This changes how enterprises evaluate and optimize their operations.
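A KPI computed directly in the stream can be as simple as a windowed aggregate. The sketch below sums order amounts per fixed, non-overlapping (tumbling) window and emits each result as soon as the window closes. The function name `tumbling_window_sums` and the `(timestamp, amount)` event shape are assumptions for this example; events are assumed to arrive in timestamp order, and windows without events are simply skipped.

```python
def tumbling_window_sums(events, window_seconds=60):
    """Yield (window_start, total) for each window once a later event closes it."""
    current_window = None
    total = 0.0
    for timestamp, amount in events:
        window_start = int(timestamp // window_seconds) * window_seconds
        if current_window is None:
            current_window = window_start
        elif window_start != current_window:
            # An event from a new window arrived: emit the finished window.
            yield current_window, total
            current_window, total = window_start, 0.0
        total += amount
    if current_window is not None:
        # End of input: emit the last, still-open window.
        yield current_window, total


orders = [(5, 10.0), (30, 5.0), (61, 7.5), (119, 2.5), (130, 1.0)]
print(list(tumbling_window_sums(orders)))
# [(0, 15.0), (60, 10.0), (120, 1.0)]
```

Stream processors such as Apache Flink provide windowing as a built-in concept with handling for late and out-of-order events, which this sketch deliberately leaves out.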

Databricks + Scalytics: Extending the Lakehouse to Streaming and Federated Data

The Databricks partnership expands the Lakehouse beyond managed internal data. Databricks unifies batch analytics, ML and AI, but the model remains centered on controlled data inside the platform.

Scalytics Streaming Intelligence extends this reach to operational systems and distributed environments that Databricks cannot directly access. It does this without data movement.

Through federated execution, Scalytics Federated connects to heterogeneous systems such as ERP platforms, message brokers, IoT hubs and edge event networks. It executes streaming transformations where data resides and integrates these streams into Databricks through Delta Live Tables, Structured Streaming and MLflow pipelines.

This allows data engineers to use familiar Spark and Delta semantics while taking advantage of Scalytics Federated for dynamic federation across transactional and operational data in flight.

The result is continuous intelligence that extends the Lakehouse across the enterprise, from factory floor sensors to financial systems, with consistent lineage, governance and security.

Bridging Business Intelligence and Operational Technology

A mature Streaming Intelligence platform provides standardized components that connect application logic, business events and operational telemetry.

Key building blocks include:

- Smart Topics to structure events and payloads

- Event grouping and transaction tracking for temporal correlation

- Connectivity analysis across distributed systems

- Dashboards and reports generated directly from the stream

- Secure gateways, including MCP compatible access layers, for workload isolation and policy enforcement

The outcome is isolation where protection is required and transparency where insight is needed.
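Event grouping and transaction tracking can be sketched as correlating events that share a transaction ID and arrive close together in time. The example below is a minimal illustration under stated assumptions: the function `group_by_transaction`, the `(timestamp, transaction_id, name)` event shape and the `max_gap_seconds` parameter are invented here, and input is assumed to be ordered by timestamp.

```python
def group_by_transaction(events, max_gap_seconds=30.0):
    """Group events per transaction ID; a long silence starts a new group."""
    open_groups = {}  # transaction_id -> list of events in the current group
    closed = []       # groups that were ended by a gap
    for event in events:
        timestamp, txn_id, _name = event
        group = open_groups.get(txn_id)
        if group is not None and timestamp - group[-1][0] > max_gap_seconds:
            # Gap since the last event of this transaction is too large.
            closed.append(group)
            group = None
        if group is None:
            group = []
            open_groups[txn_id] = group
        group.append(event)
    # At end of input, the groups that are still open are returned as well.
    closed.extend(open_groups.values())
    return closed


events = [
    (0, "A", "order_created"),
    (5, "B", "order_created"),
    (10, "A", "payment_received"),
    (100, "A", "order_created"),
    (105, "A", "payment_received"),
]
for group in group_by_transaction(events):
    print([name for _, _, name in group])
```

Once events are grouped this way, durations, missing steps and ordering problems within a transaction can be derived per group.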

What’s Next: From Passive BI to Active, Streaming Intelligence

Streaming Intelligence elevates business intelligence from passive reporting to active decision support. Enterprises that combine Streaming Intelligence with private AI gain a measurable advantage in real time operations.

- It transforms the relationship between business data and technical signals.

- It removes delays, reduces manual intervention and improves resource utilization.

- It also becomes a critical capability for SaaS applications that require adaptive behavior.

Bringing adaptive and standardized components together with AI integration will become essential.

The future of business intelligence is streaming. Our mission is to bring secure, private AI into this environment and make real time intelligence available everywhere it is needed.

About Scalytics

Scalytics Federated provides federated data processing across Spark, Flink, PostgreSQL, and cloud-native engines through a single abstraction layer. Our cost-based optimizer selects the right engine for each operation, reducing processing time while eliminating vendor lock-in.

Scalytics Copilot extends this foundation with private AI deployment: running LLMs, RAG pipelines, and ML workloads entirely within your security perimeter. Data stays where it lives. Models train where data resides. No extraction, no exposure, no third-party API dependencies.

For organizations in healthcare, finance, and government, this architecture isn't optional. It's how you deploy AI while remaining compliant with HIPAA, GDPR, and DORA.

Explore our open-source foundation: Scalytics Community Edition

Questions? Reach us on Slack or schedule a conversation.